August 16, 2025.

As recounted by CEO Satya Nadella, annual revenue for Azure, Microsoft's public cloud platform, surpassed $75B in fiscal year 2025, up 34% year over year (yoy), and Azure is taking share every quarter. Azure and other cloud services revenue, as reported in the earnings call slides, grew 39% yoy in the fourth quarter. Nadella said Microsoft continues to lead the AI infrastructure wave, with over 400 datacenters in over 70 regions, more than any other provider.

Microsoft stood up over 2 GW of new datacenter capacity in the last 12 months. For scale, 1 GW would supply roughly 800,000 US homes, so 2 GW is on the order of 1.6 million homes. This illustrates how much new power supply the AI revolution requires.
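The homes comparison is simple energy arithmetic. The sketch below, a back-of-envelope check only, converts capacity in GW to annual energy and divides by an assumed average household consumption. The figure of about 10,800 kWh per home per year is an assumption, roughly in line with commonly cited US averages, and the function name `homes_supplied` is hypothetical.

```python
"""Rough conversion from power capacity (GW) to US homes supplied."""

HOURS_PER_YEAR = 8_760  # 365 days * 24 hours

# Assumption: an average US home uses roughly 10,800 kWh of electricity per year.
# This is an approximate, illustrative figure; real averages vary by year and region.
AVG_HOME_KWH_PER_YEAR = 10_800


def homes_supplied(capacity_gw: float, utilization: float = 1.0) -> float:
    """Estimate how many average homes a given capacity could supply,
    assuming it delivers power at the stated utilization all year."""
    kwh_per_year = capacity_gw * 1e6 * HOURS_PER_YEAR * utilization  # 1 GW = 1e6 kW
    return kwh_per_year / AVG_HOME_KWH_PER_YEAR


for gw in (1.0, 2.0):
    print(f"{gw:.0f} GW ~ {homes_supplied(gw):,.0f} homes")
```

At full utilization, 1 GW works out to about 8.76 billion kWh per year, or a little over 800,000 homes under this assumption, which is consistent with the figure quoted above.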

Data:

Knowledge workers need access to the relevant data their enterprise produces in order to put it to work in applications. In enterprises whose primary function is not data science, that access must come with unified management and a relatively low learning curve. Microsoft Fabric is becoming the complete data and analytics platform for the AI era. Fabric provides access to all of an enterprise's data in one application through OneLake, and integrates with the Microsoft ecosystem. Fabric revenue was up 55% yoy, with over 25,000 customers.

Microsoft also supports third-party data analytics platforms on Azure, and both Azure Databricks and Snowflake on Azure are growing. Cosmos DB and PostgreSQL on Azure both play crucial roles in operating OpenAI's ChatGPT.

In the area of data, Microsoft continues to support crucial third-party data management tools on Azure. While maintaining its close partnership with Databricks, it is growing Fabric as an accessible, versatile tool that adds value to the existing Microsoft software ecosystem, thereby reinforcing its competitive advantage of switching costs. Within the ecosystem, customers should be able to find the resources they need to build innovative applications, along with the new capabilities they seek.

Agentic AI:

This fiscal year, Microsoft launched Azure AI Foundry to help customers design, customize, and manage AI applications and agents at scale.

Customers increasingly want to use multiple AI models to meet their specific performance, cost, and use-case requirements. Foundry lets them provision inferencing throughput once and apply it across more models than any other hyperscale cloud provider, including models from OpenAI, DeepSeek, Meta, xAI’s Grok, and very soon, Black Forest Labs and Mistral AI.

Azure AI Foundry includes the Foundry Agent Service, now being used by 14,000 customers to build agents that automate complex tasks.

As a specific measure of usage, the number of tokens served by Foundry APIs exceeded 500 trillion this year, up over 7X.

The family of Copilot apps surpassed 100 million monthly active users across commercial and consumer users.

Hundreds of partners like Adobe, SAP, ServiceNow, and Workday have built their own third-party agents that integrate with Copilot and Teams. Also, customers use Copilot Studio to extend Microsoft 365 Copilot and build their own agents. Customers created 3 million such agents using SharePoint and Copilot Studio this year.

GitHub Copilot users have reached 20 million. GitHub Copilot Enterprise customers increased 75% quarter over quarter.

In healthcare, Dragon Copilot usage is surging. Customers used ambient AI solutions to document over 13 million physician-patient encounters this quarter, up nearly 7X year-over-year. In a typical use case the copilot creates a progress note of the patient encounter based on the dialogue between the physician and the patient. The physician is relieved of the administrative work of writing the note after the patient has left.

CEO Nadella stated that revenue from Azure AI services was generally in line with expectations. And, “while we brought additional datacenter capacity online this quarter, demand remains higher than supply.”

As legacy applications transition to agentic AI, Microsoft is adding productivity and capability to its ecosystem while enabling entirely new uses, such as relieving doctors of administrative work. The success of this strategy shows in the growing usage of its applications, from Dragon Copilot in healthcare, to Fabric in the enterprise, to agentic AI in large-scale customer service organizations. But this success goes beyond usage: it translates into cash flow, the key indicator of a truly successful business.

The reason Microsoft can translate sales into cash flow so effectively is one of its central competitive advantages: scale. Scale has two components. The first is the availability of captive customers. When a new capability such as Fabric is launched, it can be sold efficiently across a massive base of existing ecosystem customers, and software vendor partners multiply this reach. In other words, each new source of revenue is scaled efficiently across a large number of receptive customers. Scale is what allows the company to afford the cost of developing the product, which includes, for example, employing engineers and building or modifying datacenter compute.

The second component is that the cost of incremental new business is quite low for Microsoft's software business. Selling the 2nd, 3rd, or 100th instance of the software adds minimal cost. We describe the costs of developing the software products as primarily fixed costs. The costs of selling additional volumes of the software, which might include expanding technical support services that can serve customers globally from a single center, are relatively low. These are called variable costs.

Compare this with a company like Starbucks. Each new Starbucks store requires investment in real estate, recruitment and training of new workers, and logistics for coffee and food products, and that store can sell only in its own geographic location. We seek to invest in companies with a scale competitive advantage, in which fixed costs outweigh variable costs.
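The contrast between the software business and the store-based business can be sketched with a toy cost model. All numbers below are hypothetical and chosen only to illustrate the shape of the two cost structures. They are not Microsoft or Starbucks figures, and the class name `CostModel` is an illustrative invention.

```python
"""Illustrative comparison of two cost structures (all numbers hypothetical)."""

from dataclasses import dataclass


@dataclass
class CostModel:
    fixed_cost: float     # up-front cost, e.g. developing a software product
    variable_cost: float  # incremental cost of serving one more customer
    price: float          # revenue per customer

    def average_cost(self, customers: int) -> float:
        """Total cost spread evenly over all customers served."""
        return self.fixed_cost / customers + self.variable_cost

    def margin(self, customers: int) -> float:
        """(Revenue - fixed - variable costs) / revenue at a given customer count."""
        revenue = self.price * customers
        total_cost = self.fixed_cost + self.variable_cost * customers
        return (revenue - total_cost) / revenue


# Hypothetical software-like profile: large fixed cost, small variable cost.
software = CostModel(fixed_cost=1_000_000, variable_cost=5, price=100)
# Hypothetical store-like profile: most costs (sites, staff, supplies) grow with volume.
store = CostModel(fixed_cost=100_000, variable_cost=70, price=100)

for n in (10_000, 100_000, 1_000_000):
    print(
        f"{n:>9,} customers | software margin {software.margin(n):6.1%} "
        f"| store margin {store.margin(n):6.1%}"
    )
```

With these made-up inputs, the software profile moves from a small loss at 10,000 customers to about 94% at a million, because the fixed cost is spread ever more thinly. The store profile levels off just under 30%, because its costs grow almost in step with volume.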

The competitive advantage of scale is what drives high Gross Margin. For a company whose Selling, General and Administrative (SG&A) costs are well controlled and whose debt is conservative, keeping Interest Expense low, high Gross Margin means that Operating Cash Flow continues to grow, funding the Capex that enables the company to readily exploit emerging markets.

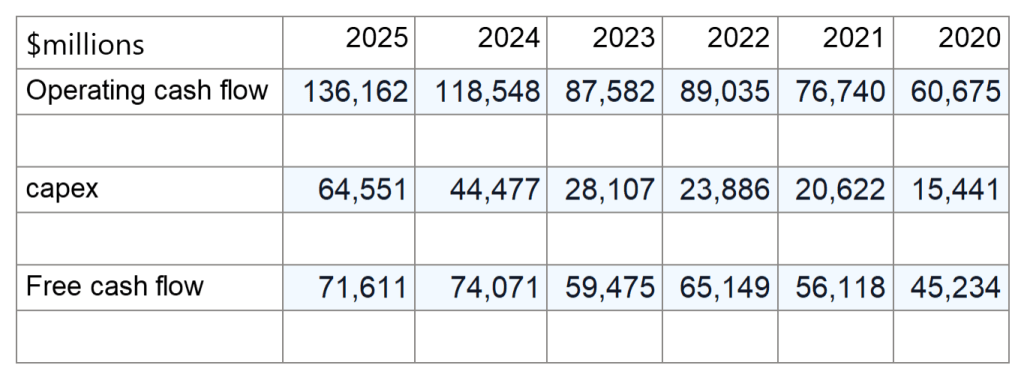

This cash generation is most evident in Microsoft's ability to ramp up capital expenditure to eye-popping levels in recent years while still producing large amounts of free cash flow.

The table above shows operating cash flow, capex (additions to property, plant and equipment) and free cash flow for Microsoft from FY2020 through FY2025. Microsoft was able to more than quadruple capex, from $15.4B in FY2020 to $64.55B in FY2025, while maintaining strong free cash flow. Although free cash flow decreased slightly, from $74B in FY2024 to $71.6B in FY2025, it remains ample to meet the company's needs. Out of FY2025 free cash flow of $71.6B, Microsoft paid approximately $24B in dividends and repurchased $18.4B of common stock; its interest expense on debt was about $2.4B.
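The ratios behind these statements can be checked with a few lines of arithmetic. This sketch uses only the rounded figures quoted in the paragraph above and assumes the usual definition of free cash flow as operating cash flow minus capex, so operating cash flow is implied rather than taken from the table. Interest expense is not subtracted again, since under US GAAP interest paid is already reflected in operating cash flow.

```python
"""Back-of-envelope check of the cash-flow figures quoted above (in $B)."""

# Figures as quoted in the text (rounded, $ billions).
CAPEX_FY2020 = 15.4
CAPEX_FY2025 = 64.55
FCF_FY2024 = 74.0
FCF_FY2025 = 71.6
DIVIDENDS_FY2025 = 24.0
BUYBACKS_FY2025 = 18.4


def implied_ocf(fcf: float, capex: float) -> float:
    """With FCF defined as operating cash flow minus capex,
    operating cash flow can be recovered as FCF + capex."""
    return fcf + capex


capex_multiple = CAPEX_FY2025 / CAPEX_FY2020
fcf_change_pct = (FCF_FY2025 - FCF_FY2024) / FCF_FY2024 * 100
shareholder_returns = DIVIDENDS_FY2025 + BUYBACKS_FY2025
payout_share_pct = shareholder_returns / FCF_FY2025 * 100

print(f"Capex multiple FY2020->FY2025: {capex_multiple:.2f}x")
print(f"FCF change FY2024->FY2025: {fcf_change_pct:.1f}%")
print(f"Implied FY2025 operating cash flow: ${implied_ocf(FCF_FY2025, CAPEX_FY2025):.2f}B")
print(f"Dividends + buybacks: ${shareholder_returns:.1f}B ({payout_share_pct:.0f}% of FCF)")
```

The capex multiple comes out at roughly 4.2x, free cash flow fell by only about 3%, and dividends plus buybacks absorbed a bit under 60% of FY2025 free cash flow.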

In comparison, a startup supplying an analogous product incurs sales and marketing costs to acquire each new customer, on top of building or paying for compute capacity. Furthermore, it must charge a lower price to attract customers who would otherwise use the more complete suite available from Microsoft. This extra effort to win customers from the large-scale provider is necessary because the startup lacks the other, more fundamental competitive advantage that Microsoft has: switching costs. Microsoft's customers are captive, though not unwilling, customers. Switching costs are sustained by continuous research and development that increases the value the product ecosystem provides, so ecosystem customers find it more economical to stay. This never-ending evolution of the product sustains the customer base and is essential to provide the basis for scale.

Outlook for FY 2026:

CFO Amy Hood gave the outlook for the coming quarter and fiscal year.

“Capital expenditure growth, as we shared last quarter, will moderate compared to FY25 with a greater mix of short-lived assets. Due to the timing of delivery of additional capacity in H1 [the first half of the fiscal year], including large finance lease sites, we expect growth rates in H1 will be higher than in H2. Revenue will continue to be driven by Azure.

“In Azure, we expect Q1 revenue growth of approximately 37% in constant currency driven by strong demand for our portfolio of services on a significant base. Even as we continue bringing more datacenter capacity online, we currently expect to remain capacity constrained through the first half of our fiscal year.”

In summary, as aggressive but prudent investment in Azure infrastructure and software tools enables the growth of data analytics platforms and agentic AI, Microsoft continues to advance as an indispensable host and enabler of the AI transformation, while further enriching its ecosystem.