March 3rd, 2025. On February 26, 2025, Nvidia announced financial results for the fourth quarter and fiscal year 2025, which ended on January 26, 2025. Because Nvidia's fiscal year ends in January, it is named for the calendar year in which it ends, even though most of the financial events it covers took place during the preceding calendar year.

Recently the market appears to have been preoccupied with a few key issues. There is widespread interest in investing in AI compute and in adapting workflows across many industries to use AI applications that boost productivity. But there is uncertainty about whether the current high level of demand for the Nvidia GPUs that produce AI compute will persist. Another issue is whether the return on investment (ROI) from the heavy capital expenditure (capex) required to build AI datacenters will justify that investment.

We can address these issues using the commentary of CFO Colette Kress (the full narrative is available on the earnings webcast), which I quote liberally in the following outline of financial and operating results.

Revenue for Q4 was $39.3 billion, exceeding management's outlook of $37.5 billion. This was up 12% sequentially and up 78% year over year (yoy). For the full fiscal year 2025, revenue was $130.5 billion, up 114% yoy. Over 88% of this came from Data Center, which reflects demand for AI compute.

Data center revenue for fiscal 2025 was $115.2 billion, up 142% from the prior year. In Q4, Data Center revenue of $35.6 billion was up 16% sequentially and 93% year-on-year.
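As a quick arithmetic check on these figures, here is a minimal Python sketch that uses only the numbers quoted above. It computes Data Center's share of fiscal 2025 revenue and backs out the prior-period values implied by the reported growth rates. Because the growth percentages are rounded, the implied prior-period figures are approximations, not reported numbers; the helper name `prior_value` is purely illustrative.

```python
# Figures quoted from the Q4 FY2025 results, in billions of US dollars.
FY2025_TOTAL_REVENUE = 130.5
FY2025_DATA_CENTER = 115.2
Q4_DATA_CENTER = 35.6


def prior_value(current: float, growth_pct: float) -> float:
    """Return the prior-period value implied by a reported growth rate.

    current = prior * (1 + growth_pct / 100), so prior = current / (1 + growth_pct / 100).
    """
    return current / (1 + growth_pct / 100)


data_center_share = FY2025_DATA_CENTER / FY2025_TOTAL_REVENUE
implied_fy2024_data_center = prior_value(FY2025_DATA_CENTER, 142)  # "up 142%"
implied_q3_data_center = prior_value(Q4_DATA_CENTER, 16)  # "up 16% sequentially"
implied_q4_fy2024_data_center = prior_value(Q4_DATA_CENTER, 93)  # "up 93% year-on-year"

print(f"Data Center share of FY2025 revenue: {data_center_share:.1%}")
print(f"Implied FY2024 Data Center revenue: ${implied_fy2024_data_center:.1f}B")
print(f"Implied Q3 FY2025 Data Center revenue: ${implied_q3_data_center:.1f}B")
print(f"Implied Q4 FY2024 Data Center revenue: ${implied_q4_fy2024_data_center:.1f}B")
```

The share works out to about 88.3%, consistent with the "over 88%" figure.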

The most current GPU platform in production is Grace Blackwell, based on the Blackwell architecture introduced at Nvidia GTC (GPU Technology Conference) in March 2024. Last August, analysts were concerned about delays in Blackwell production, but the underlying manufacturing yield problem was subsequently resolved. According to management, Blackwell is now the fastest product ramp in the company's history, unprecedented in speed and scale. Blackwell production is in full gear across multiple configurations for different datacenter architectures. In Q4, Blackwell sales exceeded management expectations: Nvidia delivered $11 billion of Blackwell revenue to meet strong demand. Grace Blackwell systems have been installed by Nvidia for its own development work, as well as by notable customers including Microsoft, CoreWeave and OpenAI.

Data Center revenue comprises two categories, Compute and Networking. Of Q4 Data Center revenue of $35.580 billion, Compute accounted for $32.556 billion (91.5%), with the remainder being Networking. Q4 Compute revenue jumped 18% sequentially and over 116% year-on-year. Customers are racing to scale infrastructure to train the next generation of cutting-edge models and unlock the next level of AI capabilities.
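The Compute/Networking split can be checked the same way. This short Python sketch treats Networking as the residual (Data Center minus Compute), as the paragraph above does; the variable names are illustrative.

```python
# Q4 FY2025 figures quoted above, in billions of US dollars.
Q4_DATA_CENTER = 35.580
Q4_COMPUTE = 32.556

# Networking is taken as the remainder of Data Center revenue after Compute.
q4_networking = Q4_DATA_CENTER - Q4_COMPUTE
compute_share = Q4_COMPUTE / Q4_DATA_CENTER
networking_share = q4_networking / Q4_DATA_CENTER

print(f"Networking revenue: ${q4_networking:.3f}B")
print(f"Compute share: {compute_share:.1%}, Networking share: {networking_share:.1%}")
```

This gives roughly $3.024 billion of Networking revenue, about 8.5% of the segment.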

Post-training, model customization and inference together demand orders of magnitude more compute than initial model training. This is creating an expanding market for application-specific models, which drives continuing demand for AI compute. The latest Nvidia GPU platforms provide markedly better power efficiency (performance per watt) and faster response. Management describes the company's performance and pace of innovation as unmatched, citing a 200x reduction in inference costs in just the last two years. Blackwell was architected for reasoning AI inference: it delivers up to 25x higher token throughput and 20x lower cost on reasoning AI models versus Hopper H100. Demand for Blackwell in inference is strong; many of the early GB200 deployments are earmarked for inference, a first for a new architecture. Blackwell addresses the entire AI market, from pretraining and post-training to inference, across cloud, on-premise and enterprise deployments.
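To put the "200x in two years" claim in yearly terms, here is a small Python sketch. It assumes, purely for illustration, that the cost reduction compounded at a constant rate each year (management did not say so), and it reads "20x lower cost" as Blackwell's cost for the same workload being 1/20 of Hopper H100's.

```python
def annualized_factor(total_factor: float, years: float) -> float:
    """Constant per-year improvement factor that compounds to total_factor over `years` years."""
    return total_factor ** (1 / years)


# "200x reduction in inference costs in just the last 2 years" (assumed even compounding).
yearly_cost_reduction = annualized_factor(200, 2)

# "20x lower cost versus Hopper H100": Blackwell cost as a fraction of Hopper cost
# for the same workload (assumed interpretation).
blackwell_cost_fraction = 1 / 20

print(f"Implied average yearly cost reduction: {yearly_cost_reduction:.1f}x")
print(f"Blackwell cost as a fraction of Hopper: {blackwell_cost_fraction:.0%}")
```

Under that assumption, a 200x reduction over two years corresponds to roughly a 14x reduction per year, since 14.1 squared is about 200.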

CUDA's programmable architecture accelerates every AI model and over 4,400 applications, protecting large infrastructure investments against obsolescence in a rapidly evolving market.

Therefore, the addressable market for Nvidia's products continues to grow rapidly. The improving performance per dollar of its products continues to raise ROI for customers. Customers can install new Nvidia hardware and keep running their existing application software, because the CUDA platform provides backward compatibility while supporting a wide and growing ecosystem of applications. CUDA is the key source of Nvidia's switching-cost competitive advantage (customers are effectively captive to the platform). Competing chip providers cannot easily lure developers away from the dominant CUDA platform to their incompatible hardware and software.

Parenthetically, the next GPU generation, Blackwell Ultra, will launch in the second half of this year, to be followed by the Vera Rubin system. According to CEO Jensen Huang, datacenter GPU system hardware, power delivery and architecture changed considerably from Hopper to Blackwell, which made the Blackwell introduction challenging. The system architecture does not change from Blackwell to Blackwell Ultra, which could make future supply ramps easier, although presumably that benefit will show up next year.

Regarding valuation, between fiscal 2024 (ended January 2024) and fiscal 2025 (ended January 2025), total net revenue rose 114.2%, from $60.922 billion to $130.497 billion. Diluted EPS rose 147.06%, from $1.19 to $2.94 per share. Trailing PE fell from 50.65 to 47.92. Note that the fiscal 2025 trailing PE (at the date of the Q4 FY2025 earnings release) is below the mean PE of 53.47 over the past decade, since 2016.
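The valuation arithmetic can be reproduced with a simple percentage-change helper. This Python sketch uses only the figures above. The "implied price" line relies on the definition trailing PE = price / trailing EPS, so price = PE × EPS; it is derived from the quoted PE and EPS values, not a quoted share price. It illustrates why the PE fell even though the price rose: EPS grew faster than the price.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new / old - 1) * 100


# Fiscal-year figures quoted above (FY2024 -> FY2025).
revenue_growth = pct_change(60.922, 130.497)  # total net revenue, $B
eps_growth = pct_change(1.19, 2.94)  # diluted EPS, $ per share
pe_change = pct_change(50.65, 47.92)  # trailing PE

# Trailing PE = price / trailing EPS, so the implied price is PE * EPS.
implied_price_change = pct_change(50.65 * 1.19, 47.92 * 2.94)

# Current trailing PE relative to the 2016-2025 mean PE of 53.47.
pe_vs_decade_mean = pct_change(53.47, 47.92)

for label, value in [
    ("Revenue growth", revenue_growth),
    ("Diluted EPS growth", eps_growth),
    ("Trailing PE change", pe_change),
    ("Implied price change", implied_price_change),
    ("PE vs decade mean", pe_vs_decade_mean),
]:
    print(f"{label}: {value:+.2f}%")
```

On these figures the PE sits about 10% below its decade mean, and the implied price rose roughly 134% while EPS rose about 147%.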

There was a recent drop in the stock price, apparently in reaction to the release of the DeepSeek R1 LLM. Hangzhou DeepSeek Artificial Intelligence Basic Technology Research Co., Ltd. does business as DeepSeek. Its R1 LLM reportedly outperformed some leading LLMs created by US corporations and was allegedly created for only about $6 million, instead of the hundreds of millions of dollars usually required, using Nvidia chips that are not the most advanced available and are therefore permitted by US trade law to be exported to the PRC. DeepSeek “raised alarms on whether America’s global lead in artificial intelligence is shrinking and called into question Big Tech’s massive spend on building AI models and data centers.”

As we know, until this moment analysts had been agonizing over whether the unprecedented capex needed to acquire the Nvidia GPU-powered datacenters required for AI production would earn an adequate ROI. Now the prospect of much cheaper production appeared and provoked the reverse crisis: AI would be abundant, but there would be no need for expensive, high-end Nvidia GPUs. Perhaps if the Communist totalitarian country had produced an LLM that was only somewhat cheaper to produce, a sort of happy medium, investors might have taken the announcement with equanimity, since improved AI ROI could happily coexist with some easing of capex.

The reality is that there will always be a new, cheaper or more powerful chip, or other novel innovations, in this rapidly developing industry. Some will improve business economics; others will not. Zooming out for perspective, it is apparent that AI will, in the course of time, pervade the global economy in countless ways. Demand for the required compute hardware and software shows no sign of abating and will continue for some time. I doubt that the time has come to lower interest in Nvidia. Nvidia dominates the market for the GPUs that are indispensable for creating AI tools, and the accompanying CUDA software platform contributes to the switching costs that keep the ecosystem of application developers on Nvidia GPUs.

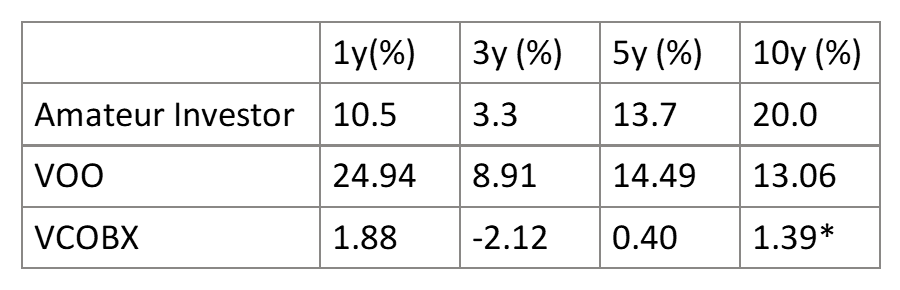

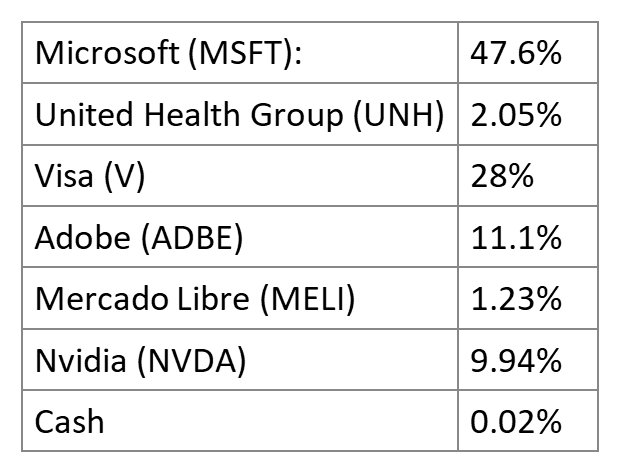

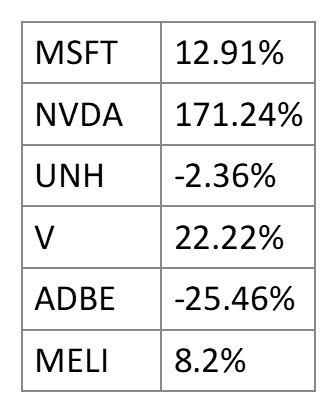

In view of the recent price decline of more than 20% from the 52-week high, together with the reduction in PE ratio over the last year, I took the opportunity to shift some portfolio allocation to Nvidia. I sold a very modest portion of my MSFT holdings, about 2%, at a weighted average price 6.14% below its 52-week high, and did likewise with Visa, which was trading at its 52-week high. I bought NVDA stock at a weighted average price 20.8% below its 52-week high. Nvidia now makes up approximately 12% of my portfolio. I did not feel brave enough to buy more, probably because of near-term volatility. I feel safer buying smaller amounts during periods of recurrent price drops. The stock is, after all, not grossly undervalued.
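For readers who want to compute a "weighted average discount from the 52-week high" for their own trades, here is a minimal Python sketch. The purchase lots and the 52-week high below are hypothetical numbers made up for illustration; they are not my actual trades. The sketch weights by shares. Weighting by dollars invested would give a slightly different figure, and the choice is an assumption.

```python
def weighted_discount_from_high(lots: list[tuple[float, float]], high: float) -> float:
    """Share-weighted average discount of purchase prices from a 52-week high.

    lots: (shares, price) pairs. Returns a fraction, e.g. 0.208 for 20.8%.
    """
    total_shares = sum(shares for shares, _ in lots)
    avg_price = sum(shares * price for shares, price in lots) / total_shares
    return 1 - avg_price / high


# Hypothetical purchase lots (shares, price) and 52-week high, for illustration only.
HYPOTHETICAL_LOTS = [(10, 120.0), (30, 116.0)]
HYPOTHETICAL_HIGH = 150.0

discount = weighted_discount_from_high(HYPOTHETICAL_LOTS, HYPOTHETICAL_HIGH)
print(f"Weighted average discount from 52-week high: {discount:.1%}")
```

With these made-up lots, the average price is $117, which is 22.0% below the hypothetical $150 high.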